Scientists across industries are looking for ways to apply the latest breakthroughs in AI to long-standing problems in their fields. Researchers at the University of Washington (UW) have taken a novel approach to noise pollution, applying AI to a standard pair of headphones to eliminate background noise during a face-to-face conversation. The resulting headphones detect when the wearer looks at a person and then filter out all sound other than that person's speech. The team calls its method “target speech hearing” (TSH) and has authored a study on its findings.


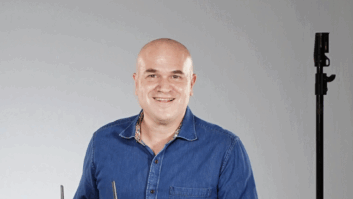

“We tend to think of AI now as web-based chatbots that answer questions,” said Shyam Gollakota, a professor at UW’s Paul G. Allen School of Computer Science and Engineering. “But in this project, we develop AI to modify the auditory perception of anyone wearing headphones, given their preferences. With our devices you can now hear a single speaker clearly even if you are in a noisy environment with lots of other people talking.”

The research team currently has no plans to bring a product to market, but it has made its code freely available on GitHub so that interested parties can build their own pair of TSH headphones.