There’s a twist to esports production. It’s virtual first, but at the tournament level it’s also live. The aesthetic is based on fantasy worlds that fans and players know intimately and are deeply attached to. So esports production must deliver both the thrill and immersion of a familiar virtual world and the very different, visceral immersion of a live event. Both the in-person and at-home experiences have to account for an audience that is used to monitors and headphones.

When Covid cancelled the 2021 Defense of the Ancients 2 (Dota 2) live world championship at the last minute, the debut of d&b Soundscape as a planned enhancement to the live proceedings was postponed. In November, fans finally got the chance to experience Soundscape in person at the Singapore Indoor Stadium.

Now in its eleventh iteration and dubbed ‘The International’, this annual esports world championship tournament is hosted by Valve, the game’s developer, and is considered one of esports’ biggest events, with a reported prize pool of $18.9 million. Jason Waggoner, sound designer and owner of post-production and live events agency Aural Fixation, has served as the tournament’s live audio sound designer and department lead since 2018, and d&b has consistently been the audio manufacturer of choice.

The d&b system is primarily focused on game sounds, announcer commentary, and video playback in the arena, as well as supporting live entertainment elements when called for. While d&b has been part of the event for several years, Soundscape was specified just last year.

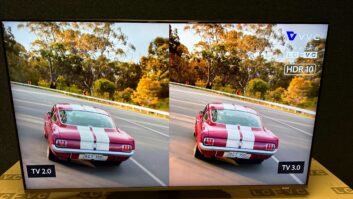

For Valve, the game audio in the arena needed to sound as close as possible to what it would be like if the audience were wearing headphones or watching at home. “Soundscape gave the audience not only a better overall experience but a more homogenous one to what they’re used to,” commented Waggoner.

“This audience is used to watching at home on Twitch and YouTube, probably on headphones, and so we needed to deliver something more akin to that experience but at the same time had all the excitement of a live show.”

Waggoner used d&b’s Soundscape simulation, which is part of d&b’s ArrayCalc software. This visualization tool is designed to model a Soundscape system’s real and perceived acoustical performance within a space. The En-Scene simulation lets d&b users evaluate how listeners will experience the spatialization created by a Soundscape system. This helps audio technicians optimize system design early in a production’s planning phase.

“This feature allowed me to deploy the system in a non-traditional way and have a lot of flexibility in how the content was delivered to the audience,” said Waggoner.

“The way Waggoner distributed the game sounds in Soundscape gave them a deeper and wider image than a traditional distribution, especially juxtaposed against our very forward ‘mono’ vocal channel, which was evenly distributed (also thanks to Soundscape) across all sources,” commented Ian Davidson, FOH engineer at the tournament. “The result was a subtle but very effective immersive experience.”

Waggoner’s audio team also complemented Soundscape with d&b V series loudspeakers and ArrayProcessing. One of the major audio challenges of this competition is keeping as much sound as possible off the stage itself. The show that streams on Twitch is the same show that plays in the arena. If arena sound spills onto the stage and players can hear it, they may pick up cues about what is happening in a different part of the game, which could give them an unfair advantage.

To help prevent that, Waggoner says, the pattern control and cardioid nature of the V series were critical to the system’s success in the arena.

Additionally, the team used ArrayProcessing, an optional software function within the d&b ArrayCalc simulation software. ArrayProcessing uses an optimization algorithm to calculate tailored filters that control the behavior of a d&b line array system across an entire listening area. Here, the arrays were arranged in an LRC (left, center, right) configuration within the 12,000-seat stadium.

“Being able to use ArrayProcessing is pretty crucial because of the even coverage that you get and the more consistent frequency response across the audience area. But it’s also very effective for something else unique to esports: the play-by-play commentary is in the arena, not in a booth like they would be for a traditional sports broadcast,” explained Waggoner. “Using the cancellation functions within ArrayProcessing helps prevent as much extraneous audio as possible from echoing into the commentators’ headsets. That’s critical for broadcast.

“This production is really about rewarding fans for their loyalty with this unique immersive sound experience. Every year there’s a lot of focus and a lot of energy put into the live production and trying to find ways to create an arena setup that is maybe a little bit beyond just a normal sports event.”

Just as the audio for Dota 2 drew on virtual and in-person aesthetics, the visuals featured what is believed to be Asia’s first complete LED virtual production, including an LED ceiling. It was a collaboration between Mo-Sys Engineering and regional virtual production partner Cgangs. The LED volume was powered by Mo-Sys’ VP Pro XR, in combination with Mo-Sys StarTracker camera tracking. The entire system was integrated with real-time DMX-controlled lighting and the LED ceiling to increase production value and to assist the cinematographer by providing real-time ‘scene spill’ on the talent. Valve selected Cgangs to handle production of the opening sequence and the team player introduction videos.

Alvin Lim, Cgangs’ Strategic Partnership and Business Development Director, explains: “The project made extensive use of Live AR, and it all needed to be shot in a single day without post-production. We only had about 1.5 hours per team to shoot and capture the opening and intro videos, which meant we were shooting real-time ICVFX and needed rock-solid camera tracking and a powerful LED content server solution that wouldn’t let us down.”

Cgangs used Mo-Sys’ StarTracker optical camera tracking and VP Pro XR, Mo-Sys’ cost-effective LED content server solution designed specifically for film and broadcast production. Mo-Sys also brought in Garden Studios, which provided expert on-site technical support.

Lim added: “We had limited time on set so needed to work quickly. We were able to do this after ample pre-production planning and selecting products that would enable rapid ICVFX production. StarTracker and VP Pro XR were perfect for this project, in terms of set-up, workflow and reliable performance. It was also a great pleasure to work with the DOP from Valve, who was using LED virtual production for the first time. He had a very clear vision and worked collaboratively with our technical team to achieve it, and this was essential to making the project a successful one.”