My first experience with NDI, NewTek’s software platform for video over IP, was to install the software tools on my office computers and see how it behaved. Very cool, actually. Sending a live video stream, and some colorbars, between the two computers was fairly painless. On my home network the NDI apps found each other, signals moved–it was impressive. But also really simple.

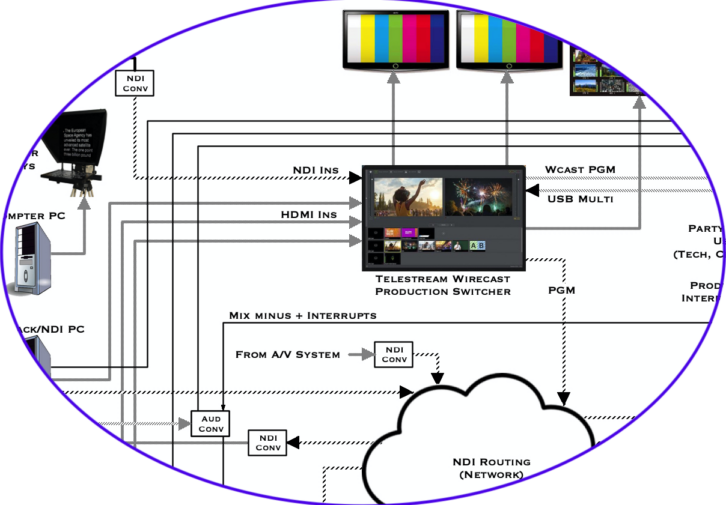

Soon after that, I got involved with a rather complex hybrid event project where NDI seemed like a good way to move a lot of signals around–doing away with most point-to-point SDI wiring. NDI could essentially take the place of an SDI matrix router, sending signals wherever they needed to go over the Ethernet network. Flexibility and ad-hoc configurations are areas where video-over-IP can be a win.

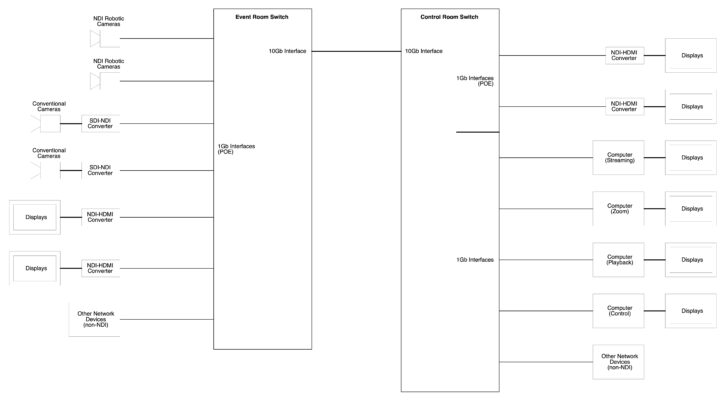

In this case, there are at least four cameras and four displays in the event space. These are connected to a control room with computers for a software production switcher (live stream), several Zoom participants, video playback, on-stage videowall, and tech control. Plus more displays. Since this is a high-quality production environment, standard NDI compression is used, at roughly 130Mb/sec for 1080p60. The system includes a large number of NDI-HDMI and NDI-SDI converters in both rooms.

Production Network

The “production network” for this system is part of a VLAN in the organization’s overall network but has its own switches for all the A/V and control traffic. Switches are the heart of a network, and a major consideration for deploying NDI on a large scale. Here we have two large NETGEAR M4300-series switches, one in the event space and one in the control room. The switches have lots of 1Gb ports and are linked via their 10Gb ports to provide a large pipe between the rooms. CAT6A cable works just fine at the distance involved, but the switches also support fiber SFPs.

Managed switches, with solid multicast support, are pretty much required in order to reduce stream duplication and to ensure that mDNS, which NDI uses for device discovery, works reliably. The backplane bandwidth of the switch needs to be sufficient for all the expected traffic on the 1G and 10G ports, which is another reason why robust switches are necessary. Both switches also support PoE on half the ports, which is helpful (almost required) for all of the NDI cameras and converters deployed. (Note that PoE is really convenient but comes with its own caveats for power capacity of the ports and the switches overall.)

With NDI, the ability for a receiving device to simply “ask” for a stream from a sending device is both a blessing and curse. It’s easy to get signals where you need them, but every new connection could be another 130Mb stream in some part of the network. Depending on network topology there may be choke points where too many streams cause trouble.
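
One way to spot such choke points ahead of time is to tally the streams that cross each link. The sketch below is only a rough estimate: it assumes unicast delivery (each receiver pulls its own copy) and the roughly 130Mb/sec figure for 1080p60 quoted earlier. The room names and the connection list are made up for illustration and are not the actual project layout.

```python
from collections import Counter

STREAM_MBPS = 130  # approximate full-bandwidth NDI rate for 1080p60 (from the text)

def link_load_mbps(connections, stream_mbps=STREAM_MBPS):
    """Estimate the Mb/s crossing each room-to-room link.

    connections: iterable of (source_room, receiver_room) pairs, one pair
    per receiver, assuming unicast (every receiver gets its own stream).
    """
    load = Counter()
    for src_room, dst_room in connections:
        if src_room != dst_room:  # streams that stay in one room never touch the uplink
            load[(src_room, dst_room)] += stream_mbps
    return dict(load)

# Hypothetical example: four cameras in the event space, each pulled by
# three control-room computers, plus two feeds sent back to stage displays.
connections = [("event", "control")] * 12 + [("control", "event")] * 2
loads = link_load_mbps(connections)
for (src, dst), mbps in sorted(loads.items()):
    print(f"{src} -> {dst}: {mbps} Mb/s")
```

With these made-up numbers, the event-to-control direction alone carries about 1560Mb/sec, which is more than a single 1Gb link could handle and is the kind of load that calls for a 10Gb uplink between switches.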

An example of this is a computer running software to create multiviews (such as BirdDog’s NDI Multiview app). Every source that appears in a multiview grid is an incoming stream to that computer, and each multiview output sent to a display is another stream going out. So an 8-source window is potentially using over 1000Mb/sec (8 × 130Mb/sec) of data throughput on the computer’s NIC and the switch port, far exceeding the 800Mb typically recommended for a 1Gb interface. Would it make sense to do multiview with the more-compressed NDI|HX? Maybe, but that assumes the devices involved support it (and can produce both full and HX streams at the same time, in the case of cameras). These setups can easily require connectivity greater than 1Gb, as well as a fairly powerful computer for all the encoding and decoding.
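
The arithmetic behind that multiview example can be written as a small budget check. This is a minimal sketch using the figures from the text (about 130Mb/sec per 1080p60 stream, and an 800Mb working limit on a 1Gb interface). The function names are my own, and real stream rates vary with content, so treat the results as ballpark numbers only.

```python
STREAM_MBPS = 130      # approximate full NDI rate for 1080p60 (from the text)
NIC_MBPS = 1000        # nominal 1Gb interface
USABLE_FRACTION = 0.8  # the ~800Mb guideline mentioned above

def nic_budget(num_streams, stream_mbps=STREAM_MBPS,
               nic_mbps=NIC_MBPS, usable_fraction=USABLE_FRACTION):
    """Return (needed_mbps, usable_mbps, fits) for streams in one direction."""
    needed = num_streams * stream_mbps
    usable = nic_mbps * usable_fraction
    return needed, usable, needed <= usable

def max_streams(stream_mbps=STREAM_MBPS, nic_mbps=NIC_MBPS,
                usable_fraction=USABLE_FRACTION):
    """Largest whole number of streams that stays within the usable budget."""
    return int(nic_mbps * usable_fraction // stream_mbps)

needed, usable, fits = nic_budget(8)
print(f"8 sources need {needed} Mb/s of {usable:.0f} usable: "
      f"{'OK' if fits else 'over budget'}")
print("Max 1080p60 streams on a 1Gb NIC:", max_streams())
print("Max 1080p60 streams on a 10Gb NIC:", max_streams(nic_mbps=10_000))
```

By this estimate a 1Gb interface comfortably takes about six full-bandwidth 1080p60 streams in one direction, so an 8-source multiview already calls for a faster NIC or more-compressed sources.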

The key point is that making NDI connections willy-nilly is not without consequence; if you see the video, there’s a stream coming from somewhere. Multicast may save some bandwidth on the source side, but every receiver of that source is still getting its own stream from a switch. Of course, manufacturers are touting 4K NDI products, which is great if you need it, but exacts a huge price on bandwidth (250 – 400Mb/s per stream).

I’ll also note that while the “plug and play” approach of NDI is nice initially, it can be tedious with a large number of endpoints. Sources and destinations don’t appear instantly and it’s not always clear who’s who. Within NDI applications, such as BirdDog’s Central control app, endpoints use their system names. Clicking one (say for a camera) will open that device’s configuration page in a browser. But if you want to access the config page without using an NDI app you must know the device’s IP address. So assigning static addresses, and keeping an accurate list, is advisable. (I do not know if using the NDI Discovery Service would alleviate this.)
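
Even a very small script can keep such a list honest. The sketch below is one possible approach, not a description of any NDI tool: it checks a hand-maintained table of system names and static addresses for malformed or duplicated entries. The device names and addresses are hypothetical, and the assumption that each device serves its config page over plain HTTP at its IP address should be checked against the actual hardware.

```python
import ipaddress

def build_inventory(entries):
    """Map system name -> IP address, rejecting duplicates and bad addresses."""
    inventory = {}
    seen_ips = {}
    for name, ip_text in entries:
        ip = ipaddress.ip_address(ip_text)  # raises ValueError if malformed
        if name in inventory:
            raise ValueError(f"duplicate name: {name}")
        if ip in seen_ips:
            raise ValueError(f"{ip} assigned to both {seen_ips[ip]} and {name}")
        inventory[name] = ip
        seen_ips[ip] = name
    return inventory

def config_url(inventory, name):
    """Build the browser URL for a device (assumes plain HTTP on the default port)."""
    return f"http://{inventory[name]}/"

# Hypothetical entries; real names and addresses come from your own records.
entries = [
    ("CAM1-STAGE-LEFT", "192.168.10.21"),
    ("CAM2-STAGE-RIGHT", "192.168.10.22"),
    ("CONV-LOBBY-DISPLAY", "192.168.10.41"),
]
inventory = build_inventory(entries)
print(config_url(inventory, "CAM1-STAGE-LEFT"))
```

Catching a duplicated address in the list before it reaches the network is a lot easier than chasing the resulting intermittent behavior afterward.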

Difficulties

Except for the NDI Tools package, the project described here uses mostly P200 robotic cameras, Studio NDI and Mini converters, and software from NDI-centric manufacturer BirdDog, due to their comprehensive product line (and availability at the time). There are also some Panasonic production cameras with SDI-NDI converters. Displays are fed with HDMI or SDI via more converters. While many aspects of the system worked fine from the outset, problems that arose included video stuttering, NDI endpoints not showing up in software applications, unresponsive or crashing software, and simply general confusion about how things were supposed to work.

I suspect that one underlying reason for the difficulties is the fact that NewTek owns the platform specification and is free to change it. Every NDI endpoint–hardware or software–uses the NDI core software, and evolution of the NDI core may or may not sit well with products from other manufacturers, who must play catch-up (much like computer applications and operating system changes).

Some products were simply new or recently released, which primarily manifested as inconsistent behavior or functions not working, but also as uncertainty about how hardware and software should behave and how certain parameters should be set. Adjusting to changes in NewTek’s NDI core could account for some of this, and I expect product maturity has improved things as well.

It is also the case that features touted by NewTek, or anyone else, may depend on a particular version of NDI, or particular configurations within devices. It’s fairly easy to inadvertently end up with slightly incompatible components. We were building a system initially in 2021, during the break-in of NDI 4, and at one point had to review all the NDI endpoints and make sure everything was on compatible versions. Naturally NDI 5 began showing up soon enough, but it was clear that immediately upgrading any of the components would be unwise. The “latest and greatest” is not always best.

Compatibility is also an issue with the protocols supported by network switches and NICs, and the variations of those protocols. NDI depends greatly on some protocols, like mDNS, that may not be fully implemented in some equipment, so old hardware might cause trouble. In the course of shaking things out for this system, the switches were updated to newer firmware with features and setups specifically for A/V over IP. This reduced headaches caused by subtle feature mismatches, or having an out-of-date version of some obscure underlying protocol. Tech support at the companies making these switches is also better equipped to assist with problems specific to A/V over IP. (Kudos to NETGEAR for taking this on because A/V over IP–including NDI, Dante, AVB and others–is dependent on the correct functioning of Ethernet switches.)

When this project began, we found a handful of docs from NewTek and other manufacturers which attempted to recommend best practices for an NDI system. Advice often conflicted, or mentioned concepts that were not quite explained. This created confusion in troubleshooting video issues because it was hard to determine if there was a problem with an NDI endpoint, the switch firmware, the switch configuration, or if we were just trying to do something that could not work! I will add that bad cables–always the first line of tech support advice–were never a problem (and rarely are if you’re conscientious).

To be frank, sometimes I had the impression that nobody fully understood NDI–except perhaps the design engineers at NewTek (whom I did not expect to be able to reach). But, as with so many new technologies, things are stabilizing. A white paper issued in June 2021 summarizes various aspects of how NDI works. It’s a bit unfinished in places but generally useful if you understand networking concepts.

In the end, it was a combination of upgrading network switches, upgrading computers, maintaining software compatibility, and persistent attention to switch configuration that cleared up most issues over the course of a couple months. Needless to say, this was “one man’s experience” on a particular project, so others may take exception. Troubleshooting tools are becoming available, but the networking aspects of NDI are not insignificant, particularly when deployed in a large system.

Demand for the kind of functionality provided by NDI has risen quickly, and so has the number of companies adopting NDI in their products. NDI provides a useful “middle ground” between SDI and ST2110 (or other video-over-IP approaches that are not tailored toward production). I will certainly consider using NDI where it fits, knowing that implementation might be more complicated than expected.