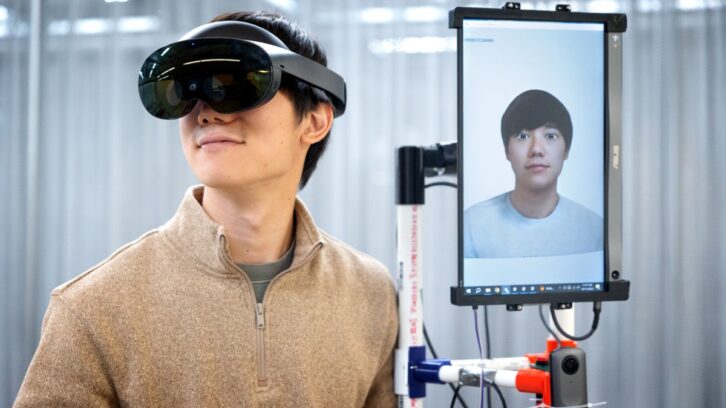

Researchers at Cornell and Brown University have developed a telepresence robot system designed to avoid the pitfalls of currently available options. The system responds to a user’s movements in real time, offering much more than a simple chatbot on a rolling tablet.

The VRoxy system, as it’s known, autopilots to different locations in a set space, so the user does not need to steer from place to place. This also eliminates the vertigo that can occur when the user watches a first-person feed of the robot roaming the halls. The user can toggle between a live view from the robot’s 360-degree camera and a pre-scanned 3D map view of the area. Selecting a location on the map view prompts the robot to relocate to that spot.

The connection between the user and the displayed avatar is several steps more advanced than current offerings: the VR headset monitors the user’s facial expressions and eye movements and replicates them in real time on the avatar. Head movements are replicated too, with an articulated mount panning or tilting the screen. Hand tracking is also present, with the robot’s finger following the user’s finger to point at anything within the user’s field of view. The researchers say they intend to eventually equip the robot with two arms that will be completely controlled by the user.

The team’s research was published in a paper presented at the ACM Symposium on User Interface Software and Technology in San Francisco.